Like so many knowledge workers, we’ve spent hours learning about and playing with the newest generative technologies from Google and OpenAI. We’re invested in these technologies and their capabilities, not only for their impact on our day-to-day work (and that impact is going to be enormous!), but also for the power they have to transform our customers’ businesses. The current pace of growth of AI is exponential, and the next generation of AI is expected to disrupt conventional practices and transform numerous industries.

After speaking with folks ranging from engineers to executives, and spanning every industry over the last few months, we’re sharing some guiding principles for how we think about the use of these technologies, along with highlights of where we believe it’s being done well.

For the uninitiated, A Brief History of Generative AI and The Generative AI Life-Cycle, by Matt White and Ali Arsanjani, respectively, are great primers.

And now we find ourselves in a bit of an arms race, in which product backlogs are growing long, launch events attract non-tech media, and there’s a proliferation of companies that ‘do old things, now powered by generative AI’ and ‘do new things, made possible by generative AI’. We now have text, audio, image, and video generation, to name a few.

So, how should we think about this movement?

First, we must discuss what we mean by ethical and responsible AI (used interchangeably here).

Google’s AI team outlines seven guiding principles, which are their ‘commitment to develop technology responsibly and establish specific application areas we will not pursue’:

1. Be socially beneficial.

2. Avoid creating or reinforcing unfair bias.

3. Be built and tested for safety.

4. Be accountable to people.

5. Incorporate privacy design principles.

6. Uphold high standards of scientific excellence.

7. Be made available for uses that accord with these principles.

For our purposes, let’s discuss the first five — the last two are more related to how AI technologies will be developed and made available. Perhaps it’s best to rephrase these for the enterprise user:

- AI is a tool, and when applied to the enterprise, should be used for a tightly scoped problem domain.

- The edge provider of the technology is the one responsible for the use and behaviors resulting from it. For example, if company.io embeds a chatbot on its site, any response given by the bot on that site is the responsibility of the company (not legal advice, but customer perception advice).

- End users of AI should be made aware of the use of AI in their interactions.

- User inputs are subject to the same protections and requirements that would otherwise be applicable when handling user data.

- AI should improve customer experiences, not merely add a new distraction.

Is anyone actually doing this? Where would we even begin?

Our advice is to begin with the user experience – and this is where Google’s demo hit the mark.

In this example, the core customer (user) experience is that of shopping and buying from a furniture retailer. The obvious customer benefit is context awareness about the customer’s purchase history and the dialogue-based interface, with opinionated recommendations. In order to achieve the demo, several capabilities are brought together, rather elegantly:

1. Natural language understanding to comprehend the user’s intent and respond intelligently

2. CRM integration to know the user and previous purchases

3. Integrations with Google Maps

4. Integrations with payments

5. Product and location inventory data integration to know what’s available and in which store

6. Generative capability to determine that some chairs are a better match for the table

7. Generative image capability

8. Low/No code website update

9. Virtual agent’s playful tone

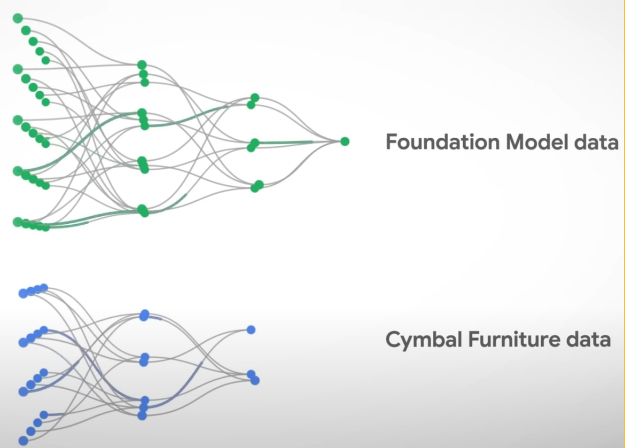

However, what many have overlooked is Google’s explicit attention to the following:

1. Training and alignment of the foundational model

2. Quality and tone from the contextual model

3. Clearly communicating to the user which model is providing the response (From knowledge tag)

4. When an image is AI generated (AI image tag)

5. Who owns the data

6. The administrative activities and privacy of the users and their interactions with the model.

Ultimately, these will determine whether a generative AI will be successfully used for customer interactions.
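To make the labeling idea concrete, here is a minimal sketch of a response that always carries its origin. The `Source` and `LabeledResponse` names, and the sample reply, are hypothetical and only loosely mirror the "From knowledge" and "AI image" tags mentioned above; this is not how Google's demo is implemented.

```python
from dataclasses import dataclass
from enum import Enum


class Source(Enum):
    # Hypothetical labels, loosely mirroring the tags shown in the demo.
    KNOWLEDGE_BASE = "From knowledge"
    GENERATED_TEXT = "AI generated"
    GENERATED_IMAGE = "AI image"


@dataclass(frozen=True)
class LabeledResponse:
    content: str
    source: Source

    def render(self) -> str:
        # The label is always shown, so the user never has to guess the origin.
        return f"[{self.source.value}] {self.content}"


# Illustrative reply only; the content is made up for this sketch.
reply = LabeledResponse("These chairs ship in 3 days.", Source.KNOWLEDGE_BASE)
print(reply.render())
```

Because the label is part of the response type rather than an optional field, a response cannot be rendered without it.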

The most commonly quoted pattern is the following: pick your favorite generative AI chatbot and let it crawl every digital asset in your organization. Now you can ask it anything and everything, whether in scope of the org or out of scope. What can go wrong?

We suspect the reason this is not more prevalent today is simple: no enterprise’s legal or compliance department would allow it. The challenge is that, without a high level of certainty and complete transparency about where a response is coming from (i.e., which context is used), it is hard to distinguish between internal and external scopes. Because most businesses would not tolerate the uncertainty of lower-confidence responses, this method is largely unacceptable for enterprise use cases.

Another major consideration is what exactly is being crawled. If one allows for every digital asset in a company to be learned, they open themselves up to traditional search security risks. Historically, early adopters of enterprise search appliances have unintentionally surfaced sensitive HR and strategy assets.
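One way to reduce this risk is to decide what may be crawled before anything is indexed. The sketch below assumes each asset already carries a classification label; the `Asset` type, the label values, and the sample paths are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    path: str
    classification: str  # hypothetical label, e.g. "public", "internal", "restricted"


# Assumption: only explicitly approved classifications are ever indexed.
INDEXABLE = {"public"}


def select_for_indexing(assets: list[Asset]) -> list[Asset]:
    """Keep only assets whose classification is on the allowlist."""
    return [a for a in assets if a.classification in INDEXABLE]


# Made-up sample assets for illustration.
assets = [
    Asset("support/returns-policy.html", "public"),
    Asset("hr/salary-bands.xlsx", "restricted"),
    Asset("strategy/2024-plan.pdf", "internal"),
]
indexed = select_for_indexing(assets)
print([a.path for a in indexed])
```

An allowlist is used rather than a blocklist so that unlabeled or newly introduced classifications are excluded by default.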

One pattern we’ll likely see in the coming months is AI scoped to a public site. Text and media content businesses can provide responses that would otherwise require parsing many sites, then aggregating and distilling the information. The caveat here is that scope matters.

Okay, so you’re saying the Fortune 100 companies haven’t put a ChatGPT chat plugin on their support pages. If not there, where can they be used?

The question many of our customers will face is when, and when not, to include generative AI techniques in their solutions. In the above example, the best case is that ChatGPT invents an innovative way of solving a problem (one that is not documented in the company’s support pages). In the worst case, this innovative solution might mislead the customer or otherwise respond in an unexpected manner, jeopardizing the customer experience. So how do we balance the two? Will Human In The Loop (HITL) help? Can HITL scale? Should we have another AI agent to “test” the output of the first agent (like GANs)?

Let’s take a lesson from CCAI, Google’s contact center solution powered by Dialogflow. CCAI has a feature called Agent Assist, which serves relevant content to a live agent (human employee in a contact center) to aid in expedient resolution of a customer’s request. This pattern, if replicated, would allow employees to utilize these tools to provide a better user experience, without directly exposing the capability to customers.

The same is true of Document AI, Google’s solution for document processing. Here, we again have the concept of HITL: a human reviewer checks the quality of the data that Document AI extracts.
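A minimal sketch of such a review gate follows, assuming each extracted field comes with a confidence score. The `ExtractedField` type, the threshold value, and the sample fields are hypothetical and do not use the Document AI client library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExtractedField:
    name: str
    value: str
    confidence: float  # model-reported score in [0, 1]


REVIEW_THRESHOLD = 0.9  # hypothetical cut-off; tune per use case


def route(fields, threshold=REVIEW_THRESHOLD):
    """Split fields into auto-accepted ones and ones queued for a human reviewer."""
    accepted = [f for f in fields if f.confidence >= threshold]
    needs_review = [f for f in fields if f.confidence < threshold]
    return accepted, needs_review


# Made-up sample extraction for illustration.
fields = [
    ExtractedField("invoice_number", "INV-001", 0.98),
    ExtractedField("total_amount", "1,250.00", 0.72),
]
accepted, needs_review = route(fields)
print([f.name for f in accepted], [f.name for f in needs_review])
```

Raising the threshold sends more fields to people and lowering it sends fewer, which is one practical lever for the "can HITL scale?" question.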

So where do we go from here?

We do not yet know the total impact these new tools will have, but we know they have the power to super-charge productivity, and eventually deliver experiences that we can’t imagine today. As early adopters and advisers to thousands of businesses globally, we are taking steps to democratize generative AI for all Onix employees:

1. All are able to submit suggestions for use cases, which will be reviewed regularly; some will be developed into a POC and taken to market

2. All are permitted access to build accelerators for internal as well as customer delivery use, but not for direct customer interactions (yet)

3. All will be trained on the fundamentals of generative AI and the associated responsibilities

4. All customer-facing employees will be able to demonstrate to our customers how to get the most value from existing products, such as Workspace and CCAI, which will be augmented with generative features